Filebeat: Overview, Installation, Configuration, and Pipeline

1. Overview

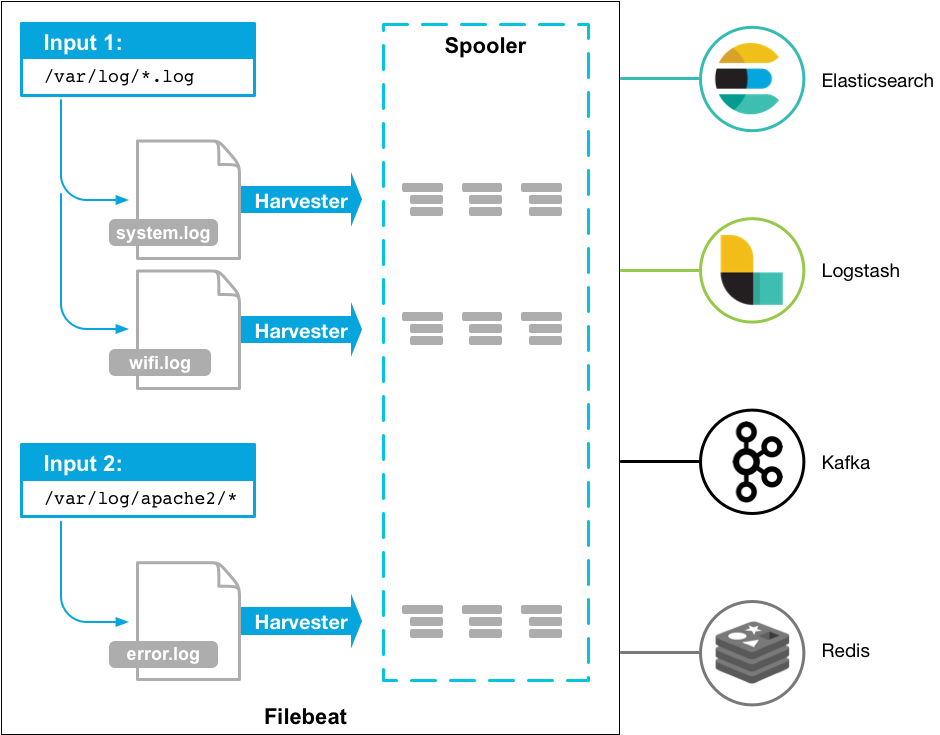

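Filebeat consists of two main components:

Inputs:

An input manages the harvesters and finds all sources to read from. If the input type is `log`, the input finds every file that matches the configured paths and starts one harvester for each file. Each input runs in its own Go routine.

The same input type (called a prospector in older Filebeat versions) can be defined multiple times.

Harvesters:

- A harvester reads the content of a single file, and one harvester is started per file. It reads the file line by line and sends the content to the output. If the file is deleted or renamed while the harvester is reading it, Filebeat keeps reading the file. As a consequence, the disk space is not freed until the harvester for that file is closed. By default, Filebeat keeps the file open until `close_inactive` is reached.
- Closing a harvester has the following consequences:
  - If the file was deleted while the harvester was still reading it, the file handle is closed and the underlying resources are freed.
  - Harvesting of the file only starts again after `scan_frequency` has elapsed.
  - If the file is moved or removed while the harvester is closed, harvesting of the file does not continue.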
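The input/harvester split can be illustrated with a simplified, single-threaded Python sketch (an assumption-laden illustration, not Filebeat's Go implementation). An input expands its glob patterns and runs one harvester per matching file. Each harvester reads only complete lines, starting from the last saved byte offset, which is the same `offset` Filebeat keeps in its registry (see section 8). The names `find_files`, `harvest`, and `scan` are hypothetical.

```python
import glob


def find_files(patterns):
    """Input step: expand every configured glob pattern into a sorted list of files."""
    files = set()
    for pattern in patterns:
        files.update(glob.glob(pattern))
    return sorted(files)


def harvest(path, offset, send):
    """Harvester step: send each complete line after `offset` and return the new offset."""
    with open(path, "rb") as f:
        f.seek(offset)
        for raw in f:
            if not raw.endswith(b"\n"):
                break  # incomplete last line: leave it for the next scan
            send(path, raw.rstrip(b"\r\n").decode("utf-8", errors="replace"))
            offset += len(raw)
    return offset


def scan(patterns, states, send):
    """One scan cycle: one harvester per matching file, resuming from the saved offsets."""
    for path in find_files(patterns):
        states[path] = harvest(path, states.get(path, 0), send)
    return states
```

Unlike this sketch, Filebeat keeps the file handle open between reads until `close_inactive` is reached. That is why the disk space of a deleted file is only released once its harvester closes.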

2. Installation

Default installation layout

| Type | Description | Default Location | Config Option |
|---|---|---|---|
| home | Home of the Filebeat installation. | | path.home |
| bin | The location for the binary files. | {path.home}/bin | |
| config | The location for configuration files. | {path.home} | path.config |
| data | The location for persistent data files. | {path.home}/data | path.data |
| logs | The location for the logs created by Filebeat. | {path.home}/logs | path.logs |
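According to the table, every location except `home` is derived from `path.home` unless it is overridden. The Python sketch below mirrors that defaulting rule as the table states it. The helper `resolve_paths` is hypothetical and is not Filebeat's own path-resolution code.

```python
import posixpath


def resolve_paths(home, overrides=None):
    """Derive the directory layout from path.home, following the defaults table.

    `overrides` maps config options (path.config, path.data, path.logs) to explicit
    values. The bin directory has no config option, so it always follows path.home.
    """
    overrides = overrides or {}
    return {
        "home": home,
        "bin": posixpath.join(home, "bin"),
        "config": overrides.get("path.config", home),
        "data": overrides.get("path.data", posixpath.join(home, "data")),
        "logs": overrides.get("path.logs", posixpath.join(home, "logs")),
    }


layout = resolve_paths("/opt/filebeat", {"path.data": "/var/lib/filebeat"})
print(layout)
```

Packaged installs pass explicit path flags (see the systemd unit file below), which is why the RPM layout differs from these defaults.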

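YUM/RPM

Add the Elastic repository definition to a `.repo` file under `/etc/yum.repos.d/` (for example `elastic.repo`):

    [elastic-7.x]
    name=Elastic repository for 7.x packages
    baseurl=https://artifacts.elastic.co/packages/7.x/yum
    gpgcheck=1
    gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
    enabled=1
    autorefresh=1
    type=rpm-md

Then install a specific version:

    yum install filebeat-7.4.0

Alternatively, download the RPM from https://www.elastic.co/cn/downloads/beats/filebeat and install it locally:

    yum localinstall -y filebeat-7*.rpm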

Installation layout (RPM package)

| Type | Description | Location |
|---|---|---|
| home | Home of the Filebeat installation. | /usr/share/filebeat |
| bin | The location for the binary files. | /usr/share/filebeat/bin |
| config | The location for configuration files. | /etc/filebeat |
| data | The location for persistent data files. | /var/lib/filebeat |
| logs | The location for the logs created by Filebeat. | /var/log/filebeat |

Binary archives

Binary packages are available as zip, tar.gz, and tgz archives. Download page: https://www.elastic.co/cn/downloads/beats/filebeat

Installation layout (binary archive)

| Type | Description | Location |
|---|---|---|
| home | Home of the Filebeat installation. | {extract.path} |
| bin | The location for the binary files. | {extract.path} |
| config | The location for configuration files. | {extract.path} |
| data | The location for persistent data files. | {extract.path}/data |
| logs | The location for the logs created by Filebeat. | {extract.path}/logs |

Starting Filebeat from the command line

    /usr/share/filebeat/bin/filebeat COMMAND [SUBCOMMAND] [FLAGS]

| Command | Description |
|---|---|
| export | Exports the configuration to the console, including the index template, ILM policy, and dashboards. |
| help | Shows the help documentation. |
| keystore | Manages the secrets keystore. |
| modules | Manages configured modules. |
| run | Runs Filebeat. This command is used by default if you start Filebeat without specifying a command. |
| setup | Sets up the initial environment, including the index template, ILM policy, write alias, Kibana dashboards (when available), and machine learning jobs (when available). |
| test | Tests the configuration file. |
| version | Shows version information. |

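| Global flag | Description |
|---|---|
| -E "SETTING_NAME=VALUE" | Overrides a setting from the configuration file. |
| -M "VAR_NAME=VALUE" | Overrides a setting from a module's configuration file. |
| -c FILE | Specifies the Filebeat configuration file. The path is relative to `path.config`. |
| -d SELECTORS | Enables debug output for the given selectors (a comma-separated list of components; `-d "*"` enables all of them). |
| -e | Logs to stderr and disables syslog/file output. |
| --path.config | Sets the path for configuration files. |
| --path.data | Sets the path for data files. |
| --path.home | Sets the home path. |
| --path.logs | Sets the path for log files. |
| --strict.perms | Enables strict permission checking on configuration files (enabled by default). |

Examples:

    /usr/share/filebeat/bin/filebeat --modules mysql -M "mysql.slowlog.var.paths=[/root/slow.log]" -e
    /usr/share/filebeat/bin/filebeat -e -E output.console.pretty=true --modules mysql -M 'mysql.slowlog.var.paths=["/root/mysql-slow-sql-log/mysql-slowsql.log"]' -M "mysql.error.enabled=false" -E output.elasticsearch.enabled=false

The first command runs the mysql module against a single slow-log file and logs to stderr. The second command also disables the module's error log and the Elasticsearch output, and prints events to the console in pretty-printed form, which is convenient for testing.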
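`-E` (like `-M` for module variables) addresses a setting by its dotted path. Filebeat treats a dotted key the same as the nested YAML form, so `-E output.elasticsearch.enabled=false` sets `enabled: false` under `output.elasticsearch`. The Python sketch below shows that mapping with a hypothetical `apply_override` helper. It is a simplification: it only parses booleans and integers and keeps everything else as a string, whereas Filebeat's configuration library handles more value types.

```python
def parse_value(raw):
    """Simplified value parsing: booleans and integers, otherwise keep the string."""
    if raw in ("true", "false"):
        return raw == "true"
    try:
        return int(raw)
    except ValueError:
        return raw


def apply_override(config, setting):
    """Apply one "dotted.key=value" override to a nested dict, creating levels as needed."""
    key, sep, raw = setting.partition("=")
    if not sep:
        raise ValueError(f"expected KEY=VALUE, got {setting!r}")
    *parents, leaf = key.split(".")
    node = config
    for part in parents:
        node = node.setdefault(part, {})
    node[leaf] = parse_value(raw)
    return config


config = {"output": {"elasticsearch": {"hosts": ["localhost:9200"]}}}
for flag in ["output.console.pretty=true", "output.elasticsearch.enabled=false"]:
    apply_override(config, flag)
print(config)
```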

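Running Filebeat with systemd

    systemctl enable filebeat       # start Filebeat at boot
    systemctl start filebeat
    systemctl stop filebeat
    systemctl status filebeat
    journalctl -u filebeat.service  # show Filebeat's output from the journal
    systemctl daemon-reload         # reload unit files after editing them
    systemctl restart filebeat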

The Filebeat systemd unit file, `/usr/lib/systemd/system/filebeat.service`:

    [Unit]
    Description=Filebeat sends log files to Logstash or directly to Elasticsearch.
    Documentation=https://www.elastic.co/products/beats/filebeat
    Wants=network-online.target
    After=network-online.target

    [Service]
    Environment="BEAT_LOG_OPTS=-e"
    Environment="BEAT_CONFIG_OPTS=-c /etc/filebeat/filebeat.yml"
    Environment="BEAT_PATH_OPTS=-path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat"
    ExecStart=/usr/share/filebeat/bin/filebeat $BEAT_LOG_OPTS $BEAT_CONFIG_OPTS $BEAT_PATH_OPTS
    Restart=always

    [Install]
    WantedBy=multi-user.target

| Variable | Description | Default value |
|---|---|---|
| BEAT_LOG_OPTS | Log options | -e |
| BEAT_CONFIG_OPTS | Flags for configuration file path | -c /etc/filebeat/filebeat.yml |
| BEAT_PATH_OPTS | Other paths | -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat |
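`ExecStart` references these variables as unbraced `$VAR` words, so systemd splits each value at whitespace into separate arguments. The Python sketch below reproduces that expansion to show the effective command line, and shows how clearing `BEAT_LOG_OPTS` drops `-e`. The helper `expand_exec_start` is hypothetical and simplified: it ignores systemd's quoting rules inside variable values.

```python
import shlex

# Values copied from the unit file above.
ENV = {
    "BEAT_LOG_OPTS": "-e",
    "BEAT_CONFIG_OPTS": "-c /etc/filebeat/filebeat.yml",
    "BEAT_PATH_OPTS": "-path.home /usr/share/filebeat -path.config /etc/filebeat "
                      "-path.data /var/lib/filebeat -path.logs /var/log/filebeat",
}
EXEC_START = "/usr/share/filebeat/bin/filebeat $BEAT_LOG_OPTS $BEAT_CONFIG_OPTS $BEAT_PATH_OPTS"


def expand_exec_start(exec_start, env):
    """Expand unbraced $VAR words: each value is split at whitespace into zero or more args."""
    argv = []
    for word in shlex.split(exec_start):
        if word.startswith("$"):
            argv.extend(env.get(word[1:], "").split())  # unset variables expand to nothing
        else:
            argv.append(word)
    return argv


argv = expand_exec_start(EXEC_START, ENV)
print(" ".join(argv))
without_e = expand_exec_start(EXEC_START, {**ENV, "BEAT_LOG_OPTS": ""})
```

On a real system, such an override belongs in a drop-in file (for example, created with `systemctl edit filebeat`) that contains a `[Service]` section with a new `Environment=` line, followed by `systemctl restart filebeat`.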

3. Docker image

docker pull docker.elastic.co/beats/filebeat:7.4.0

docker pull filebeat:7.4.0

Installation layout inside the image

| Type | Description | Location |
|---|---|---|
| home | Home of the Filebeat installation. | /usr/share/filebeat |
| bin | The location for the binary files. | /usr/share/filebeat |
| config | The location for configuration files. | /usr/share/filebeat |
| data | The location for persistent data files. | /usr/share/filebeat/data |
| logs | The location for the logs created by Filebeat. | /usr/share/filebeat/logs |

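Kubernetes deployment

Filebeat is deployed to the `kube-system` namespace by default.

It runs as a DaemonSet, so one Filebeat pod is scheduled on every node.

The `/var/lib/docker/containers` directory of each node is mounted into the Filebeat container.

By default, Filebeat ships logs to the `elasticsearch` service in the `kube-system` namespace. To send them to a different Elasticsearch cluster, change these environment variables in the manifest:

    - name: ELASTICSEARCH_HOST
      value: elasticsearch
    - name: ELASTICSEARCH_PORT
      value: "9200"
    - name: ELASTICSEARCH_USERNAME
      value: elastic
    - name: ELASTICSEARCH_PASSWORD
      value: changeme

Download the manifest, deploy it, and check the DaemonSet:

    curl -L -O https://raw.githubusercontent.com/elastic/beats/7.4/deploy/kubernetes/filebeat-kubernetes.yaml
    kubectl create -f filebeat-kubernetes.yaml
    kubectl --namespace=kube-system get ds/filebeat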
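These environment variables reach Filebeat through the environment-variable expansion in its configuration file. That expansion supports a `${VAR:default}` form, which falls back to the default when `VAR` is unset. The same `${...}` syntax appears in `${redis_pwd}` in the Redis input example in section 5. The Python sketch below imitates the lookup. The helper `expand_env` and the sample `hosts` expression are illustrative assumptions and are not copied from the manifest.

```python
import re

_VAR = re.compile(r"\$\{([^}]+)\}")


def expand_env(text, env):
    """Replace ${NAME} and ${NAME:default} references using the `env` mapping."""
    def replace(match):
        name, has_default, default = match.group(1).partition(":")
        if name in env:
            return env[name]
        if has_default:
            return default
        raise KeyError(f"missing environment variable {name}")
    return _VAR.sub(replace, text)


hosts = "${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}"
print(expand_env(hosts, {}))
print(expand_env(hosts, {"ELASTICSEARCH_HOST": "es.example.com"}))
```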

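OKD deployment

Download the same manifest:

    curl -L -O https://raw.githubusercontent.com/elastic/beats/7.4/deploy/kubernetes/filebeat-kubernetes.yaml

Edit the manifest so that the Filebeat container runs as root in privileged mode:

    securityContext:
      runAsUser: 0
      privileged: true

Then grant the privileged security context constraint (SCC) to the `filebeat` service account:

    oc adm policy add-scc-to-user privileged system:serviceaccount:kube-system:filebeat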

4. Configuration

Configuration file path (RPM package): /etc/filebeat/filebeat.yml

The configuration is written in YAML.

| Setting | Description | Example |
|---|---|---|
| processors.* | Processors | `processors: [{include_fields: {fields: ["cpu"]}}, {drop_fields: {fields: ["cpu.user", "cpu.system"]}}]` |
| filebeat.modules | Modules | `filebeat.modules: [{module: mysql, error: {enabled: true}}]` |
| filebeat.inputs | Inputs | `filebeat.inputs: [{type: log, enabled: false, paths: ["/var/log/*.log"]}]` |
| output.* | Outputs | `output.console: {enabled: true}` |
| path.* | Locations of the files Filebeat creates | `path.home: /usr/share/filebeat` `path.data: ${path.home}/data` `path.logs: ${path.home}/logs` |
| setup.template.* | Index template | |
| logging.* | Logging | `logging.level: info` `logging.to_stderr: false` `logging.to_files: true` |
| monitoring.* | X-Pack monitoring | `monitoring.enabled: false` `monitoring.elasticsearch.hosts: ["localhost:9200"]` |
| http.* | HTTP endpoint | `http.enabled: false` `http.port: 5066` `http.host: localhost` |
| filebeat.autodiscover.* | Autodiscover | |
| General settings | See the general settings table below | |
| Global settings | See the global settings table below | |
| queue.* | Internal queue settings | |

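Global settings

| Setting | Default | Description | Example |
|---|---|---|---|
| registry.path | ${path.data}/registry | Root directory of the registry files. | `filebeat.registry.path: registry` |
| registry.file_permissions | 0600 | Permissions of the registry files. Ignored on Windows. | `filebeat.registry.file_permissions: 0600` |
| registry.flush | 0s | How long registry updates may remain unwritten before they are flushed to disk. With `0s`, the registry is written after each successfully published batch of events. | `filebeat.registry.flush: 5s` |
| registry.migrate_file | | Path to an old (6.x) single-file registry that should be migrated to the new registry directory format. | `filebeat.registry.migrate_file: /path/to/old/registry_file` |
| config_dir | | Full path to a directory with additional input configuration files (deprecated since 6.0 in favor of `filebeat.config.inputs`). | `filebeat.config_dir: path/to/configs` |
| shutdown_timeout | 0 (disabled) | How long Filebeat waits on shutdown for the publisher to finish sending events. | `filebeat.shutdown_timeout: 5s` |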

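General settings

| Setting | Default | Description | Example |
|---|---|---|---|
| name | The hostname | Name of the Beat, included in every published event. | `name: "my-shipper"` |
| tags | | Tags added to the `tags` field of every event, useful for grouping and filtering. | `tags: ["service-X", "web-tier"]` |
| fields | | Additional custom fields added to every event. | `fields: {project: "myproject", instance-id: "57452459"}` |
| fields_under_root | false | If set to true, the custom fields are stored at the top level of the event instead of under `fields`, and they overwrite Filebeat's own fields with the same name. | `fields_under_root: true` |
| processors | | Processors applied to the events (see section 7). | |
| max_procs | Number of logical CPUs | Maximum number of CPUs that can execute simultaneously. | |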
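The effect of `fields_under_root` can be illustrated with a small Python sketch. The helper `apply_fields` is hypothetical, and its shallow, top-level merge simplifies how Filebeat merges nested fields.

```python
import copy


def apply_fields(event, fields, fields_under_root=False):
    """Return a copy of `event` with the custom `fields` added.

    Under root, custom keys overwrite top-level keys of the same name;
    otherwise they are nested under the "fields" key.
    """
    result = copy.deepcopy(event)
    if fields_under_root:
        result.update(copy.deepcopy(fields))
    else:
        result.setdefault("fields", {}).update(copy.deepcopy(fields))
    return result


event = {"message": "GET /", "level": "info"}
custom = {"level": "debug", "review": 1}
print(apply_fields(event, custom))
print(apply_fields(event, custom, fields_under_root=True))
```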

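Configuration example

    # Modules
    filebeat.modules:
      - module: system

    # General settings
    fields:
      level: debug
      review: 1
    fields_under_root: false

    # Processors
    processors:
      - decode_json_fields:
          fields: ["message"]      # illustrative: fields that contain JSON strings

    # Inputs
    filebeat.inputs:
      - type: log
        paths:
          - /var/log/*.log         # illustrative path

    # Outputs: Filebeat allows only one output to be enabled at a time
    output.elasticsearch:
      hosts: ["localhost:9200"]    # illustrative host
    #output.logstash:
    #  hosts: ["localhost:5044"]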

5. Input types

| Type | Description | Example |
|---|---|---|
| Log | Reads lines from log files. | `filebeat.inputs: [{type: log, paths: [/var/log/messages, "/var/log/*.log"]}]` |
| Stdin | Reads events from standard input. | `filebeat.inputs: [{type: stdin}]` |
| Container | Reads container log files. | `filebeat.inputs: [{type: container, paths: ['/var/lib/docker/containers/*/*.log']}]` |
| Kafka | Reads from topics in a Kafka cluster. | `filebeat.inputs: [{type: kafka, hosts: ["kafka-broker-1:9092", "kafka-broker-2:9092"], topics: ["my-topic"], group_id: "filebeat"}]` |
| Redis | Reads entries from the Redis slowlog. | `filebeat.inputs: [{type: redis, hosts: ["localhost:6379"], password: "${redis_pwd}"}]` |
| UDP | Reads events over UDP. | `filebeat.inputs: [{type: udp, max_message_size: 10KiB, host: "localhost:8080"}]` |
| Docker | Reads logs from Docker containers. | `filebeat.inputs: [{type: docker, containers.ids: ['e067b58476dc57d6986dd347']}]` |
| TCP | Reads events over TCP. | `filebeat.inputs: [{type: tcp, max_message_size: 10MiB, host: "localhost:9000"}]` |
| Syslog | Reads syslog events over TCP or UDP. | `filebeat.inputs: [{type: syslog, protocol.udp: {host: "localhost:9000"}}]` |
| s3 | Reads log files from AWS S3 objects announced through an SQS queue. | `filebeat.inputs: [{type: s3, queue_url: "https://test.amazonaws.com/12/test", access_key_id: my-access-key, secret_access_key: my-secret-access-key}]` |
| NetFlow | Receives NetFlow and IPFIX flow records over UDP. | |
| Google Pub/Sub | Reads messages from a Google Cloud Pub/Sub subscription. | |

6. Output types

| Type | Description | Example |
|---|---|---|
| Elasticsearch | Sends events directly to Elasticsearch over its HTTP API. | `output.elasticsearch: {hosts: ["https://localhost:9200"], protocol: "https", index: "filebeat-%{[agent.version]}-%{+yyyy.MM.dd}", ssl.certificate_authorities: ["/etc/pki/root/ca.pem"], ssl.certificate: "/etc/pki/client/cert.pem", ssl.key: "/etc/pki/client/cert.key", username: "filebeat_internal", password: "YOUR_PASSWORD"}` |
| Logstash | Sends events to Logstash. | `output.logstash: {hosts: ["127.0.0.1:5044"]}` |
| Kafka | Publishes events to Kafka topics. | `output.kafka: {hosts: ["kafka1:9092", "kafka2:9092", "kafka3:9092"], topic: '%{[fields.log_topic]}', partition.round_robin: {reachable_only: false}, required_acks: 1, compression: gzip, max_message_bytes: 1000000}` |
| Redis | Inserts events into a Redis list or channel. | `output.redis: {hosts: ["localhost"], password: "my_password", key: "filebeat", db: 0, timeout: 5}` |
| File | Writes events to rotating files. | `output.file: {path: "/tmp/filebeat", filename: filebeat}`; optional: `rotate_every_kb: 10000`, `number_of_files: 7`, `permissions: 0600` |
| Console | Writes events to stdout. | `output.console: {pretty: true}` |
| Cloud | Sends events to Elastic Cloud (configured with `cloud.id` and `cloud.auth`). | |
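Several output settings accept format strings. `%{[agent.version]}` in the Elasticsearch `index` and `%{[fields.log_topic]}` in the Kafka `topic` read a field from the event, and `%{+yyyy.MM.dd}` formats the event timestamp. The Python sketch below resolves both kinds of reference. The helper `format_string` is hypothetical, only translates the `yyyy`, `MM`, `dd`, and `HH` date tokens, and assumes the timestamp is the event's UTC `@timestamp`.

```python
import re
from datetime import datetime, timezone

# Subset of Joda-style date tokens mapped to strftime directives.
DATE_TOKENS = {"yyyy": "%Y", "MM": "%m", "dd": "%d", "HH": "%H"}


def lookup(event, dotted):
    """Follow a dotted field path such as 'agent.version' through nested dicts."""
    node = event
    for part in dotted.split("."):
        node = node[part]  # raises KeyError if the field is missing
    return node


def format_string(fmt, event, timestamp):
    """Resolve %{[field.path]} and %{+date-pattern} references in `fmt`."""
    def replace(match):
        expr = match.group(1)
        if expr.startswith("+"):
            pattern = expr[1:]
            for token, directive in DATE_TOKENS.items():
                pattern = pattern.replace(token, directive)
            return timestamp.strftime(pattern)
        return str(lookup(event, expr.strip("[]")))
    return re.sub(r"%\{([^}]*)\}", replace, fmt)


event = {"agent": {"version": "7.4.0"}, "fields": {"log_topic": "mysql-slowlog"}}
ts = datetime(2019, 10, 11, 1, 29, 35, tzinfo=timezone.utc)
print(format_string("filebeat-%{[agent.version]}-%{+yyyy.MM.dd}", event, ts))
print(format_string("%{[fields.log_topic]}", event, ts))
```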

7. Processors

Syntax

    processors:
      - if:
          <condition>
        then:
          - <processor_name>:
              <parameters>
          - <processor_name>:
              <parameters>
          ...
        else:
          - <processor_name>:
              <parameters>
          - <processor_name>:
              <parameters>

Processors can also be attached to an individual input:

    - type: <input_type>
      processors:
        - <processor_name>:
            when:
              <condition>
            <parameters>

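Condition syntax

equals: the field has exactly the given value.

    equals:
      http.response.code: 200

contains: the field contains the given substring.

    contains:
      status: "Specific error"

regexp: the field matches the regular expression.

    regexp:
      system.process.name: "foo.*"

range: the field value lies within the given bounds. The condition supports `lt`, `lte`, `gt`, and `gte`, and accepts only integer or float values.

    range:
      http.response.code:
        gte: 400

network: the IP address in the field belongs to a named range (such as `private` or `loopback`) or to a CIDR block.

    network:
      source.ip: private
      destination.ip: '192.168.1.0/24'

A list of ranges matches if any entry matches:

    network:
      destination.ip: ['192.168.1.0/24', '10.0.0.0/8', loopback]

has_fields: all listed fields exist.

    has_fields: ['http.response.code']

or: at least one of the conditions matches.

    or:
      - <condition1>
      - <condition2>
      - <condition3>
      ...

For example:

    or:
      - equals:
          http.response.code: 304
      - equals:
          http.response.code: 404

and: all conditions match.

    and:
      - <condition1>
      - <condition2>
      - <condition3>
      ...

For example:

    and:
      - equals:
          http.response.code: 200
      - equals:
          status: OK

Conditions can be nested, for example `<condition1> OR (<condition2> AND <condition3>)`:

    or:
      - <condition1>
      - and:
          - <condition2>
          - <condition3>

not: the condition does not match.

    not:
      equals:
        status: OK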
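The condition operators can be illustrated with a small Python evaluator. This is a sketch under assumptions, not libbeat's implementation. The helper names are hypothetical, `regexp` is treated as an unanchored search, and the named ranges are reduced to IPv4 `private` and `loopback`.

```python
import ipaddress
import re

# Simplified named ranges (IPv4 only) standing in for Filebeat's named networks.
NAMED_NETWORKS = {
    "private": ["10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16"],
    "loopback": ["127.0.0.0/8"],
}
RANGE_OPS = {"lt": lambda a, b: a < b, "lte": lambda a, b: a <= b,
             "gt": lambda a, b: a > b, "gte": lambda a, b: a >= b}


def get_field(event, dotted):
    """Look up a dotted key such as 'http.response.code'; None if it is missing."""
    node = event
    for part in dotted.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def in_network(value, spec):
    """True if the IP in `value` falls in any CIDR block or named range in `spec`."""
    if value is None:
        return False
    ip = ipaddress.ip_address(value)
    items = spec if isinstance(spec, list) else [spec]
    return any(ip in ipaddress.ip_network(cidr)
               for item in items for cidr in NAMED_NETWORKS.get(item, [item]))


def matches(cond, event):
    """Evaluate one condition (a dict with a single operator key) against an event."""
    (op, arg), = cond.items()
    if op == "equals":
        return all(get_field(event, k) == v for k, v in arg.items())
    if op == "contains":
        return all(isinstance(get_field(event, k), str) and v in get_field(event, k)
                   for k, v in arg.items())
    if op == "regexp":
        return all(isinstance(get_field(event, k), str)
                   and re.search(v, get_field(event, k)) is not None for k, v in arg.items())
    if op == "range":
        return all(isinstance(get_field(event, k), (int, float))
                   and all(RANGE_OPS[o](get_field(event, k), b) for o, b in bounds.items())
                   for k, bounds in arg.items())
    if op == "has_fields":
        return all(get_field(event, k) is not None for k in arg)
    if op == "network":
        return all(in_network(get_field(event, k), v) for k, v in arg.items())
    if op == "or":
        return any(matches(c, event) for c in arg)
    if op == "and":
        return all(matches(c, event) for c in arg)
    if op == "not":
        return not matches(arg, event)
    raise ValueError(f"unsupported condition: {op}")


event = {"http": {"response": {"code": 404}}, "status": "Specific error occurred",
         "source": {"ip": "10.1.2.3"}, "destination": {"ip": "192.168.1.20"}}
print(matches({"range": {"http.response.code": {"gte": 400}}}, event))
```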

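Supported processors

| Processor | Purpose | Example |
|---|---|---|
| add_cloud_metadata | Adds metadata about the cloud instance the host runs on. | |
| add_docker_metadata | Adds Docker container metadata. | `processors: [{add_docker_metadata: {host: "unix:///var/run/docker.sock"}}]` |
| add_fields | Adds custom fields to the event. | `processors: [{add_fields: {target: project, fields: {name: myproject, id: '574734885120952459'}}}]` |
| add_host_metadata | Adds metadata about the host. | `processors: [{add_host_metadata: {netinfo.enabled: false, cache.ttl: 5m, geo: {name: nyc-dc1-rack1, location: "40.7128, -74.0060", continent_name: North America, country_iso_code: US, region_name: New York, region_iso_code: NY, city_name: New York}}}]` |
| add_kubernetes_metadata | Adds Kubernetes pod metadata. | `processors: [{add_kubernetes_metadata: {host: <hostname>, kube_config: ~/.kube/config, default_indexers.enabled: false, default_matchers.enabled: false, indexers: [{ip_port: ~}], matchers: [{fields: {lookup_fields: ["metricset.host"]}}]}}]` |
| add_labels | Adds labels to the event. | `processors: [{add_labels: {labels: {number: 1, with.dots: test, nested: {with.dots: nested}, array: [do, re, {with.field: mi}]}}}]` |
| add_locale | Adds the machine's time zone (as an offset or an abbreviation). | `processors: [{add_locale: ~}]` or `processors: [{add_locale: {format: abbreviation}}]` |
| add_observer_metadata | Adds metadata about the observer host. | |
| add_process_metadata | Adds metadata about the process identified by a PID field. | |
| add_tags | Adds tags to the event. | `processors: [{add_tags: {tags: [web, production], target: "environment"}}]` |
| community_id | Computes the Community ID network flow hash. | |
| convert | Converts field values to other types, optionally writing them to new fields. | `processors: [{convert: {fields: [{from: "src_ip", to: "source.ip", type: "ip"}, {from: "src_port", to: "source.port", type: "integer"}], ignore_missing: true, fail_on_error: false}}]` |
| decode_base64_field | Decodes a base64-encoded field. | |
| decode_cef | Decodes messages in Common Event Format (CEF). | |
| decode_csv_fields | Decodes fields that contain CSV data. | |
| decode_json_fields | Decodes fields that contain JSON strings. | |
| decompress_gzip_field | Decompresses a gzip-compressed field. | |
| dissect | Splits a field into new fields using a pattern of literal delimiters. | `processors: [{dissect: {tokenizer: "%{key1} %{key2}", field: "message", target_prefix: "dissect"}}]` |
| dns | Performs DNS lookups (reverse lookups of IP addresses). | |
| drop_event | Drops the whole event when the condition matches. | `processors: [{drop_event: {when: <condition>}}]` |
| drop_fields | Removes the listed fields when the condition matches. | `processors: [{drop_fields: {when: <condition>, fields: ["field1", "field2", ...], ignore_missing: false}}]` |
| extract_array | Copies array elements into individual fields. | `processors: [{extract_array: {field: my_array, mappings: {source.ip: 0, destination.ip: 1, network.transport: 2}}}]` |
| include_fields | Keeps only the listed fields when the condition matches. | `processors: [{include_fields: {when: <condition>, fields: ["field1", "field2", ...]}}]` |
| registered_domain | Extracts the registered domain from a fully qualified domain name. | |
| rename | Renames fields. | `processors: [{rename: {fields: [{from: "a.g", to: "e.d"}], ignore_missing: false, fail_on_error: true}}]` |
| script | Processes the event with JavaScript. | |
| timestamp | Parses a timestamp from a field. | |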
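The `dissect` example splits `message` at the literal text between `%{...}` keys. The Python sketch below, a hypothetical `dissect` function, approximates only that basic behavior. Each key takes the shortest text up to the next delimiter, and the last key takes the rest. Dissect's key modifiers (append, skip, padding) are not handled, and a message that does not match returns `None`.

```python
import re

KEY = re.compile(r"%\{([^}]*)\}")        # captures the key name
KEY_TOKEN = re.compile(r"%\{[^}]*\}")    # same token without a group, for splitting


def dissect(tokenizer, text, target_prefix="dissect"):
    """Extract %{key} values from `text` using the tokenizer's literals as delimiters."""
    keys = KEY.findall(tokenizer)
    literals = KEY_TOKEN.split(tokenizer)  # text before, between and after the keys
    pattern = re.escape(literals[0])
    for literal in literals[1:]:
        pattern += "(.*?)" + re.escape(literal)
    match = re.fullmatch(pattern, text, flags=re.DOTALL)
    if match is None:
        return None
    return {target_prefix: dict(zip(keys, match.groups()))}


print(dissect("%{key1} %{key2}", "hello big world"))
```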

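8. Understanding the registry file

Registry file path (RPM package): /var/lib/filebeat/registry/filebeat/data.json

Example content:

    [{"source":"/root/mysql-slow-sql-log/mysql-slowsql.log","offset":1365442,"timestamp":"2019-10-11T09:29:35.185399057+08:00","ttl":-1,"type":"log","meta":null,"FileStateOS":{"inode":2360926,"device":2051}}]

Fields:

- source: full path of the harvested log file.
- offset: number of bytes already harvested, that is, the byte position in the file up to which Filebeat has read.
- timestamp: the time this file's state was last updated.
- ttl: time-to-live of the state. -1 means it never expires, so the file keeps being tracked and harvested for as long as it exists.
- type: the input type that harvested the file (`log` here).
- meta: additional input-specific metadata (`null` here).
- FileStateOS: operating-system-specific file identity.
  - inode: inode number of the log file.
  - device: device number of the disk that holds the log file.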
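`offset` is a byte position, and `FileStateOS` identifies the file on disk. Comparing an entry with the file's current `stat` data therefore shows how far Filebeat has read. The Python sketch below does this for a registry in the `data.json` format shown above. The helper `registry_lag` and its output keys are hypothetical, and the sketch assumes a Unix-like system where `os.stat` reports the same inode and device numbers that Filebeat records.

```python
import json
import os


def registry_lag(registry_json):
    """Compare each registry entry with the file currently found at its `source` path."""
    report = []
    for entry in json.loads(registry_json):
        item = {"source": entry["source"], "offset": entry["offset"],
                "same_file": False, "pending_bytes": None}
        try:
            st = os.stat(entry["source"])
        except FileNotFoundError:
            report.append(item)  # the file was removed after it was harvested
            continue
        ident = entry["FileStateOS"]
        # A different inode/device means the path now points to another file (e.g. after rotation).
        item["same_file"] = st.st_ino == ident["inode"] and st.st_dev == ident["device"]
        if item["same_file"]:
            # Bytes written after the recorded offset; negative if the file was truncated.
            item["pending_bytes"] = st.st_size - entry["offset"]
        report.append(item)
    return report
```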

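When a disk is formatted with a traditional Unix file system (such as ext4), the operating system divides it into two areas:

- a data area, which stores the contents of files;
- an inode area, which stores file metadata such as the owner, permissions, size, and timestamps.

Every file has an inode that holds this metadata, and you can inspect it with the `stat` command:

    $ stat /var/log/messages
      File: ‘/var/log/messages’
      Size: 56216339 Blocks: 109808 IO Block: 4096 regular file
    Device: 803h/2051d Inode: 1053379 Links: 1
    Access: (0600/-rw-------) Uid: ( 0/ root) Gid: ( 0/ root)
    Access: 2019-10-06 03:20:01.528781081 +0800
    Modify: 2019-10-12 13:59:13.059112545 +0800
    Change: 2019-10-12 13:59:13.059112545 +0800
     Birth: -

The `Device` line shows the device number in both hexadecimal (803h) and decimal (2051d). The decimal value, 2051, is what Filebeat stores as `device` in the registry example above.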
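On Linux, a device number packs a major number (the driver, for example 8 for SCSI/SATA disks) and a minor number (the disk or partition). The Python sketch below decodes it with glibc's `dev_t` bit layout, which is what `os.major()` and `os.minor()` use on Linux. The helper names are hypothetical. For 2051 (0x803), the result is major 8 and minor 3, which typically corresponds to `/dev/sda3`.

```python
def linux_major(dev):
    """Major number under glibc's dev_t layout: bits 8-19 plus bits 44-63."""
    return ((dev & 0x00000000000FFF00) >> 8) | ((dev & 0xFFFFF00000000000) >> 32)


def linux_minor(dev):
    """Minor number under glibc's dev_t layout: bits 0-7 plus bits 20-43."""
    return (dev & 0x00000000000000FF) | ((dev & 0x00000FFFFFF00000) >> 12)


def describe_device(dev):
    return {"decimal": dev, "hex": format(dev, "x"),
            "major": linux_major(dev), "minor": linux_minor(dev)}


print(describe_device(2051))
```

On a live system, `os.stat(path).st_dev` returns the same number that Filebeat records in the registry.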